Distributed Scale

Scale Postgres by distributing data & queries. You can start with a single Citus node, then add nodes & rebalance shards when you need to grow.

Parallelized Performance

Speed up queries by 20x to 300x (or more) through parallelism, keeping more data in memory, higher I/O bandwidth, and columnar compression.

Power of Postgres

Citus is an extension (not a fork) to the latest Postgres versions, so you can use your familiar SQL toolset & leverage your Postgres expertise.

Simplified Architecture

Reduce your infrastructure headaches by using a single database for both your transactional and analytical workloads.

Open Source

Download and use Citus open source for free. You can manage Citus yourself, embrace open source, and help us improve Citus via GitHub.

Managed Database Service

Focus on your application & forget about your database. Run your app on Citus in the cloud with Azure Cosmos DB for PostgreSQL.

Citus = Postgres At Any Scale

The Citus database gives you the superpower of distributed tables. Because Citus is an open source extension to Postgres, you can leverage the Postgres features, tooling, and ecosystem you love. And thanks to schema-based sharding you can onboard existing apps with minimal changes and support entirely new workloads like microservices. With Citus, you can scale from a single node to a distributed cluster, giving you all the greatness of Postgres—at any scale.

CAPABILITIES TABLE

Applications That Love Citus

- MULTI-TENANT SAAS

SaaS apps often have a natural dimension on which to distribute data across nodes, such as tenant, customer, or account_id. This means SaaS apps have a data model that is a good fit for a distributed database like Citus: just shard by tenant_id. For cases with no natural distribution key, you can use schema-based sharding.

Features for Multi-tenant SaaS applications

- Transparent sharding in the database layer

- SQL query & transaction routing

- Easy to add nodes & rebalance shards

- Able to scale out without giving up Postgres
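To make the idea of sharding by tenant_id and rebalancing more concrete, here is a minimal conceptual sketch in Python. It is not how Citus is implemented: the hash function, the shard count of 32, the node names, and every function name below are assumptions chosen for illustration. It shows only that a tenant always maps to the same shard, and that adding a node moves whole shards rather than re-hashing tenants.

```python
import hashlib


def shard_for(tenant_id: str, shard_count: int) -> int:
    """Map a tenant to a shard with a stable hash (illustrative, not Citus's own hash)."""
    digest = hashlib.sha256(tenant_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


def initial_placement(shard_count: int, nodes: list[str]) -> dict[int, str]:
    """Spread shards over the starting nodes round-robin."""
    return {shard: nodes[shard % len(nodes)] for shard in range(shard_count)}


def rebalance(placement: dict[int, str], nodes: list[str]) -> dict[int, str]:
    """Move shards from the fullest node to the emptiest until counts differ by at most one.

    Assumes `nodes` contains every node already present in `placement`.
    """
    new_placement = dict(placement)
    counts = {node: 0 for node in nodes}
    for node in new_placement.values():
        counts[node] += 1
    while max(counts.values()) - min(counts.values()) > 1:
        source = max(counts, key=counts.get)
        target = min(counts, key=counts.get)
        # Pick any shard currently on the fullest node and move it.
        shard = next(s for s, n in new_placement.items() if n == source)
        new_placement[shard] = target
        counts[source] -= 1
        counts[target] += 1
    return new_placement


def node_for(tenant_id: str, placement: dict[int, str]) -> str:
    """Route a tenant's queries to the node that holds its shard."""
    return placement[shard_for(tenant_id, len(placement))]
```

In this toy model, growing from two nodes to three with `rebalance(initial_placement(32, ["node-a", "node-b"]), ["node-a", "node-b", "node-c"])` relocates only some of the shards, while `shard_for` returns the same shard for each tenant before and after.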

- REAL-TIME ANALYTICS

Customer-facing real-time analytics dashboards need to deliver sub-second query responses to 1000s of concurrent users, while simultaneously ingesting fresh data and enabling users to query the fresh data in real time, too.

By scaling out Postgres across multiple nodes, Citus gives your analytics dashboards the compute, memory, and performance they need to process billions of events in real time.

Features for Real-time analytics dashboards

- Ingest event data in real-time

- Low latency for customer-facing dashboards

- Massively parallel UPDATEs/DELETEs

- TIME SERIES

Whether your app works with financial data, website analytics, IoT data, ad tech, or any other type of monitoring data, time series workloads often need to grow beyond the memory, compute, and disk of a single node.

Citus gives you parallelism, a distributed architecture, and columnar compression to deal with large data volumes, and can be combined with PostgreSQL’s time partitioning to optimize your time series queries.

Features for Time series workloads

- Sub-second query responses for billions of events

- Citus columnar for compression of older data

- Integrated with native Postgres partitioning (& pg_partman)
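As a rough illustration of how time partitioning and compression of older data fit together, the Python sketch below computes monthly partition names and bounds and picks out the partitions old enough to be candidates for columnar storage. The table name `events`, the naming scheme, and the three-month retention window are all hypothetical; in practice the partitions would be created by Postgres itself (or pg_partman), not by application code.

```python
from datetime import date


def partition_name(day: date, table: str = "events") -> str:
    """Name of the monthly partition that holds rows for `day` (hypothetical naming scheme)."""
    return f"{table}_{day.year:04d}_{day.month:02d}"


def partition_bounds(day: date) -> tuple[date, date]:
    """Inclusive start and exclusive end of the month containing `day`."""
    start = day.replace(day=1)
    if start.month == 12:
        end = date(start.year + 1, 1, 1)
    else:
        end = date(start.year, start.month + 1, 1)
    return start, end


def compression_candidates(
    partition_starts: list[date], today: date, keep_recent_months: int = 3
) -> list[str]:
    """Partitions whose month lies at least `keep_recent_months` months before the current month."""
    current = today.year * 12 + today.month
    return [
        partition_name(start)
        for start in sorted(partition_starts)
        if current - (start.year * 12 + start.month) >= keep_recent_months
    ]
```

Recent partitions stay in regular row storage for fast ingest and updates, while the names returned by `compression_candidates` are the ones a maintenance job might convert to columnar.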

- MICROSERVICES

Citus supports schema-based sharding, which allows distributing regular database schemas across many machines. This sharding methodology fits nicely with typical microservices architecture, where each microservice can have its own schema in a shared distributed database.

Schema-based sharding is an easier model to adopt: create a new schema, set the search_path in your service, and you’re ready to go.
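The steps above can be sketched as a small Python helper that produces the SQL a service might run at startup. This is a sketch under assumptions, not an official recipe: the helper name `service_schema_sql` and the service name `orders` are hypothetical, and the `citus.enable_schema_based_sharding` setting is assumed to be available in your Citus version (it was introduced alongside schema-based sharding in Citus 12). The statements are returned as strings so you can pass them to whichever Postgres driver you already use.

```python
import re

# Accept only plain lowercase identifiers so the name can be embedded in SQL safely.
_IDENTIFIER = re.compile(r"^[a-z_][a-z0-9_]*$")


def service_schema_sql(service: str) -> list[str]:
    """Build the statements that give one microservice its own distributed schema."""
    if not _IDENTIFIER.match(service):
        raise ValueError(f"not a simple SQL identifier: {service!r}")
    return [
        # Assumed Citus setting: schemas created afterwards become distributed schemas.
        "SET citus.enable_schema_based_sharding TO on;",
        f"CREATE SCHEMA IF NOT EXISTS {service};",
        # Unqualified table names in this session now resolve to the service's schema.
        f"SET search_path TO {service};",
    ]
```

For example, `service_schema_sql("orders")` returns three statements, ending with `SET search_path TO orders;`, after which the service can keep using unqualified table names.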

Advantages of using Citus for microservices:

- Ingest strategic business data from microservices into common distributed tables for analytics

- Efficiently use hardware by balancing services on multiple machines

- Isolate noisy services to their own nodes

- Easy to understand sharding model

- Quick adoption

Ready to get started?

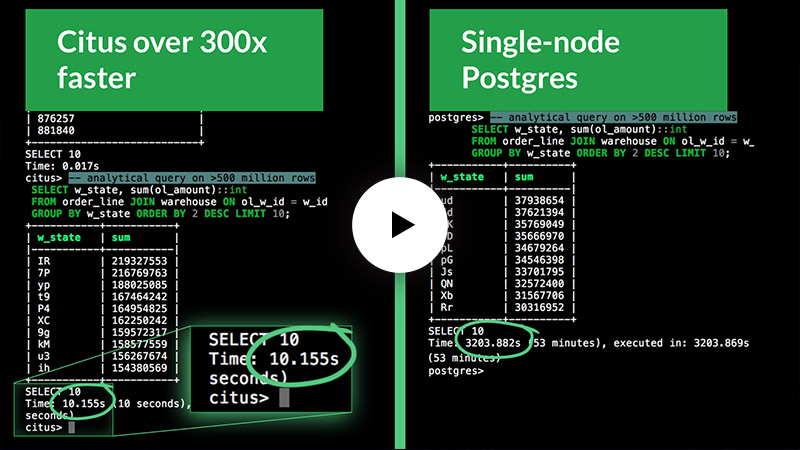

Why Shard Postgres? Performance

See how Citus gives this application ~20X faster transactions and

A side-by-side comparison of Citus vs. single-node Postgres, comparing the performance of transactions, analytical queries, and analytical queries with rollups.

How to Get Citus

Citus Open Source

With Citus, you extend Postgres with superpowers like distributed tables, a distributed SQL query engine, columnar storage, & more.

Citus on Azure

You can also spin up a Citus cluster in the cloud on Azure with Azure Cosmos DB for PostgreSQL.